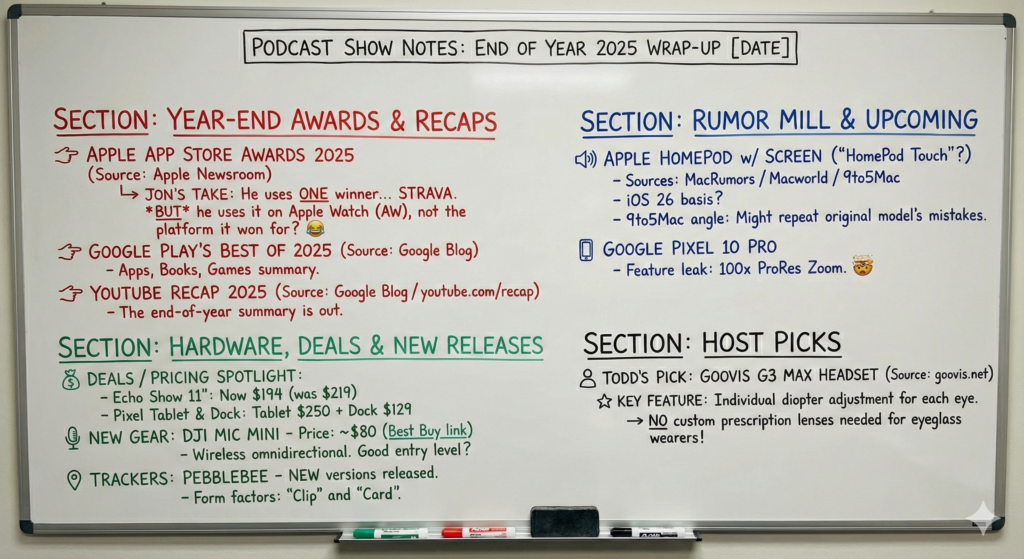

In this episode, Jon Westfall and I are joined by frequent guest panelist Sven Johannsen for our end-of-year wrap-ups. We discuss Apple’s 2025 App Store Awards, and Jon notes that he uses award winner Strava, though mostly on the Apple Watch rather than on its main platform. The conversation also covers Google Play’s Best of 2025 (spanning apps, books, and games) and the latest YouTube End-of-Year Recap.

On the hardware front, the rumor mill is spinning about an Apple HomePod with a screen (possibly called the “HomePod Touch”), with speculation pointing toward an 11-inch display. The hosts compare smart display pricing, weighing the Echo Show 11 ($194–$219) against the Pixel Tablet and Dock bundle ($250 + $129, or $379 total).

Sven’s new accessories are also highlighted, including the DJI Mic Mini at a competitive $80 and the new clip and card versions of the Pebblebee trackers.

Todd’s segment features the Goovis G3 Max headset, praised for its individual diopter adjustment, which lets eyeglass wearers use the device without custom prescription lenses. The show wraps up with a mention of the Google Pixel 10 Pro and its headline feature: 100x Pro Res Zoom.

Available via Apple iTunes.

MobileViews YouTube Podcasts channel

MobileViews Podcast on Audible.com